Disaster Recovery

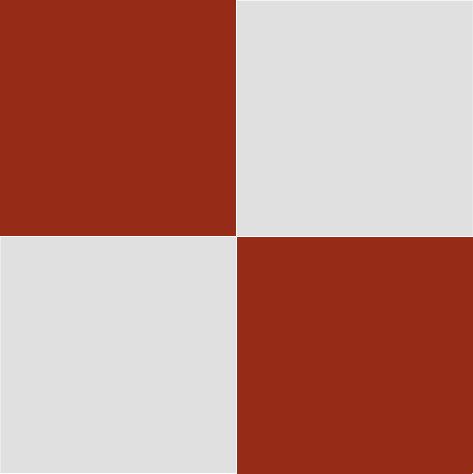

The best way to approach Disaster Recovery (DR) is to imagine that the entire system has been wiped out, with nothing remaining for you to rely on. How do you proceed?

Partial restorations also benefit from backups.

A DR backup is used in an emergency, when a standard backup fails to restore a system. There will be times when standard backups fail.

Even if a system uses RAID disks to increase reliability, that still does not guarantee a restoration. We do not use RAID, due to cost.

On Amazon AWS, one creates a “snapshot” as a quick and efficient way to back up and restore from a point in time. If the snapshot captures a system that is already corrupted, it is hit and miss whether it will restore correctly.

Years ago, IT people were perhaps familiar with “fsck” for repairing hard disk corruption. The newer utilities replacing fsck have never restored a system for me.

Before we back up a complete system, we do two checks. The first is to verify that the database appears to be okay by running a mysqldump command. The second is to go to the root directory and do a recursive listing, to check that the listing does not freeze on any file:

cd /

ls -laR
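The listing check above can be wrapped so that a hang is reported rather than waited on indefinitely. This is a minimal bash sketch, assuming GNU coreutils `timeout` is installed; the function name `check_listing`, the 1800-second default and the output path are hypothetical choices, not part of the original procedure.

```bash
# Sketch of the pre-backup "cd /; ls -laR" check with a time limit.
# Assumptions: GNU coreutils `timeout` is installed; check_listing, the
# 1800-second default and the output path are hypothetical choices.

# Usage: check_listing [DIR] [SECONDS] [OUTFILE]
check_listing() {
  local dir="${1:-/}"                 # the article walks the tree from /
  local limit="${2:-1800}"            # seconds before the walk counts as frozen
  local out="${3:-/tmp/ls-laR.txt}"   # full listing, kept for later comparison
  local status=0

  # ls -R does not follow symbolic links to directories.
  timeout "$limit" ls -laR "$dir" > "$out" 2> "$out.err" || status=$?

  if [ "$status" -eq 124 ]; then
    # timeout exits with status 124 when it had to stop the command.
    echo "FROZEN: listing of $dir did not finish within ${limit}s" >&2
    return 2
  fi
  if [ -s "$out.err" ]; then
    # Transient errors under /proc or /sys are normal; others deserve a look.
    echo "WARNING: $(wc -l < "$out.err") error line(s), see $out.err" >&2
  fi
  echo "Listed $(wc -l < "$out") lines from $dir"
}
```

For example, run `check_listing / 1800 /root/ls-laR.txt` as root before the backup; a return status of 2 means the walk stalled. A process stuck in uninterruptible disk I/O may not stop even for `timeout`, which is itself a warning sign.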

Another check is to verify that the file contents contain no virus. One could make a tar backup, download it to a PC and run anti-virus software on the file. I have noticed in recent years that a .tar file downloaded with FileZilla to a Mac will sometimes not verify. This is why it is important to use “tar tvf” to verify downloaded files. If this happens, we can use the “aws s3 cp file s3://bucket” syntax to copy the file to a nominated bucket and download it via the bucket’s console page.
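One way to tell whether a downloaded .tar arrived intact is to record a checksum on the server and compare it on the PC, then list the archive. This bash sketch assumes GNU `sha256sum`; on a Mac, `shasum -a 256 -c backup.tar.sha256` performs the same comparison. The function names and the bucket name in the comment are hypothetical.

```bash
# Sketch: detect a damaged download by comparing SHA-256 checksums, then
# confirm tar can still list the archive.
# Assumption: GNU `sha256sum` is installed (on macOS, `shasum -a 256` is the
# equivalent). make_checksum and verify_download are hypothetical names.

# On the server, before downloading: writes ARCHIVE.sha256 beside the archive.
make_checksum() {
  local archive="$1"
  ( cd "$(dirname "$archive")" && sha256sum "$(basename "$archive")" ) > "$archive.sha256"
}

# On the PC, after downloading the archive and its .sha256 file together.
verify_download() {
  local archive="$1"
  if ! ( cd "$(dirname "$archive")" && sha256sum -c --quiet "$(basename "$archive").sha256" ); then
    echo "Checksum mismatch: $archive is damaged, download it again" >&2
    return 1
  fi
  if ! tar tf "$archive" > /dev/null; then
    echo "tar cannot list $archive" >&2
    return 1
  fi
  echo "$archive verified"
}

# If downloads keep failing, the S3 route from the text applies, e.g.
#   aws s3 cp backup.tar s3://my-dr-bucket/   (bucket name is hypothetical)
```

The checksum catches damage that a listing alone can miss, such as a changed byte inside a file's contents.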

While good to use, WordPress plugins have not always detected a virus. The message here is, do not trust or assume, rather take your own steps to do a full DR backup.

One problem has been that a hosting provider may have used what is now an old version of MySQL. When one tries to re-install with another provider, or even with the same one, the database may not import. This can be alarming. To fix this, one could recreate the older website with non-essential WP plugins removed from both the directory files and the database, optimise the database, then try the export and import again.

Another way is to use more manual methods, running the new and old sites in tandem and fixing up the new site until it is fully running. For example, export the pages, make global replacements for the new domain name, import the pages, manually recreate the menus and widgets, then the other plugin configurations. One may use a text editor to make global replacements in a database or exported files when moving the same website content to a new domain name. A new domain can readily import the media files from an old website that is still up and running. What is easy to forget are the menus and widgets, the global theme settings and the CSS master files.
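The global replacement step can also be scripted instead of done in a text editor. A minimal bash sketch, assuming bare host names such as the hypothetical `old.example.com` and `new.example.com`. One caution: WordPress stores some values as PHP serialized strings that record their own length, so a plain text replacement that changes a domain's length inside them (for example in a raw SQL dump) can break those values. For the database, a serialization-aware tool such as WP-CLI's `wp search-replace` is the safer choice.

```bash
# Sketch: replace an old domain with a new one in an exported file, writing a
# new copy so the original export is kept. Domain names are hypothetical.
# Caution: PHP-serialized values record string lengths, so changing a
# domain's length inside them can corrupt those values.

# Usage: replace_domain OLD NEW INFILE OUTFILE
replace_domain() {
  local old="$1" new="$2" in="$3" out="$4"
  local old_re count

  # Escape the dots so "old.example.com" cannot match "oldXexample.com".
  old_re=$(printf '%s' "$old" | sed 's/[.]/\\./g')
  count=$(grep -cF -- "$old" "$in" || true)

  # "|" is the sed delimiter, so NEW must not contain "|" or "&".
  sed "s|${old_re}|${new}|g" "$in" > "$out"
  echo "Replaced $old with $new on $count line(s); wrote $out"
}
```

For example, `replace_domain old.example.com new.example.com pages.xml pages-new.xml` leaves `pages.xml` untouched, so the export can be redone if the result looks wrong.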

When companies design their disaster recovery backups, there is, to my knowledge, no expectation of a completely foolproof method, but rather an approach that reduces the risk of loss. Of course, this is still not good enough for certain classes of companies and data, which is why they may spend money and assign staff to backups and processes.

At various times I have restored company systems that were truly on the precipice of losing their data. I never lost a system, but this was partly due to people helping me and partly due to my own decisions. In the earlier days of technology this was a huge problem.

DR is not only about hard disks and data; it is physical as well. One company had its power backed up by banks of batteries. When the power went out, the batteries were not charged, and the computer room was blacked out.

The industry has its own ongoing practices. In years gone by, people built data centers with pop-out roof domes for an internal explosion, explosion-proof columns, rooftops that could withstand a helicopter falling on them, and diesel engine power. None of these ever came into actual use in Australia, except the power. Herbert Street in St Leonards, Sydney, could use its backup power for the North Shore Hospital. Those systems have since been decommissioned.

There are WordPress backup plugins that are convenient and very useful, yet even those plugins have failed. Throughout my IT career I have kept seeing where things fail, so perhaps I have a knack for it.

Given these problems, we should do a few things, because once data is lost there is no way to recover it. In the 1970s I recall one university student crying in the computer room when his data was lost from a floppy disk. I have several stories of data loss.

This is my advice…

If possible, have an archive or development site with a copy of a website. This will become out-of-date though.

In Amazon, re-create snapshots once or twice a year, or after major work.
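Snapshots can be taken from the EC2 console, or from the AWS CLI with `aws ec2 create-snapshot`. The bash sketch below wraps that command with a dated description. The volume ID is a placeholder, and `PRINT_ONLY` is a hypothetical switch of this example (it only prints the command), not an AWS feature.

```bash
# Sketch: take an EBS snapshot with a dated, descriptive label.
# Assumptions: the AWS CLI is installed and allowed to call
# ec2 create-snapshot; vol-0123456789abcdef0 is a placeholder volume ID.
# PRINT_ONLY=1 is this script's own switch: it prints instead of running.

# Usage: snapshot_volume VOLUME_ID REASON
snapshot_volume() {
  local volume_id="$1" reason="$2"
  local description="DR $(date +%Y-%m-%d) ${reason}"
  local cmd=(aws ec2 create-snapshot --volume-id "$volume_id" --description "$description")

  if [ "${PRINT_ONLY:-0}" = "1" ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `snapshot_volume vol-0123456789abcdef0 "after plugin clean-up"` records both the date and the reason, which helps when choosing a snapshot months later.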

Always use the “cd /; ls -laR” technique before a backup, and use the mysqldump command to verify the database.
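The mysqldump check can be made a little stricter than "the command finished". This bash sketch assumes credentials are supplied through a MySQL option file such as `~/.my.cnf`, and that mysqldump's default comments are left on, so a complete dump ends with a `-- Dump completed` line. The function names are hypothetical.

```bash
# Sketch: dump a WordPress database and check the dump ran to completion.
# Assumptions: credentials come from a MySQL option file such as ~/.my.cnf,
# and mysqldump's default comments are left on, so a finished dump ends with
# a "-- Dump completed" line. dump_db and check_dump are hypothetical names.

# Usage: check_dump FILE  (also worth running on an older dump before relying on it)
check_dump() {
  local file="$1" ending
  ending=$(tail -n 3 "$file")
  case "$ending" in
    *"-- Dump completed"*)
      echo "$file looks complete ($(grep -c '^INSERT INTO' "$file") INSERT lines)" ;;
    *)
      echo "$file is truncated or was not written by mysqldump" >&2
      return 1 ;;
  esac
}

# Usage: dump_db DBNAME OUTFILE
dump_db() {
  local db="$1" out="$2"
  # --single-transaction gives a consistent InnoDB dump without locking tables.
  if ! mysqldump --single-transaction --routines "$db" > "$out"; then
    echo "mysqldump failed for $db" >&2
    return 1
  fi
  check_dump "$out"
}
```

The database name can be taken from wp-config.php; `dump_db wp_site /home/ec2-user/db.sql` is an illustrative call with a hypothetical name.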

Once a tar file backup is done, do “tar tvf” to list the files to verify there is no obvious corruption.
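The `tar tvf` check can be scripted so that its exit status and entry count are kept alongside the backup. A minimal bash sketch; the function name and the `.list` file are illustrative, and it relies only on tar returning a non-zero status when it cannot read the archive. The wp-config.php spot-check is an extra of this example.

```bash
# Sketch: list a freshly made tar backup with "tar tvf" and keep the listing.
# A non-zero exit status from tar means it could not read the archive cleanly.
# verify_tar and the .list/.list.err files are hypothetical names.

# Usage: verify_tar ARCHIVE
verify_tar() {
  local archive="$1"
  local list="$archive.list"

  if ! tar tvf "$archive" > "$list" 2> "$list.err"; then
    echo "FAILED: tar could not read $archive, see $list.err" >&2
    return 1
  fi
  # Spot-check for a file a WordPress restore cannot do without.
  grep -q 'wp-config\.php$' "$list" || echo "NOTE: no wp-config.php in $archive" >&2
  echo "OK: $(wc -l < "$list") entries in $archive"
}
```

Keeping the `.list` file also gives a record of what the backup contained, which is useful when comparing against a later backup.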

If a site has been infected by a virus, my experience is that one should not restore but rebuild.

One should not keep unused WordPress plugins.

One should never install a WP Plugin outside of the recognised WP repository.

Never add another application onto the same database. If that application is compromised, the WP database or hard disk files can be compromised.

This means installing different programs on different server instances. I have yet to work out how to install two separate databases on one instance, as all the documentation I have used to date has failed.

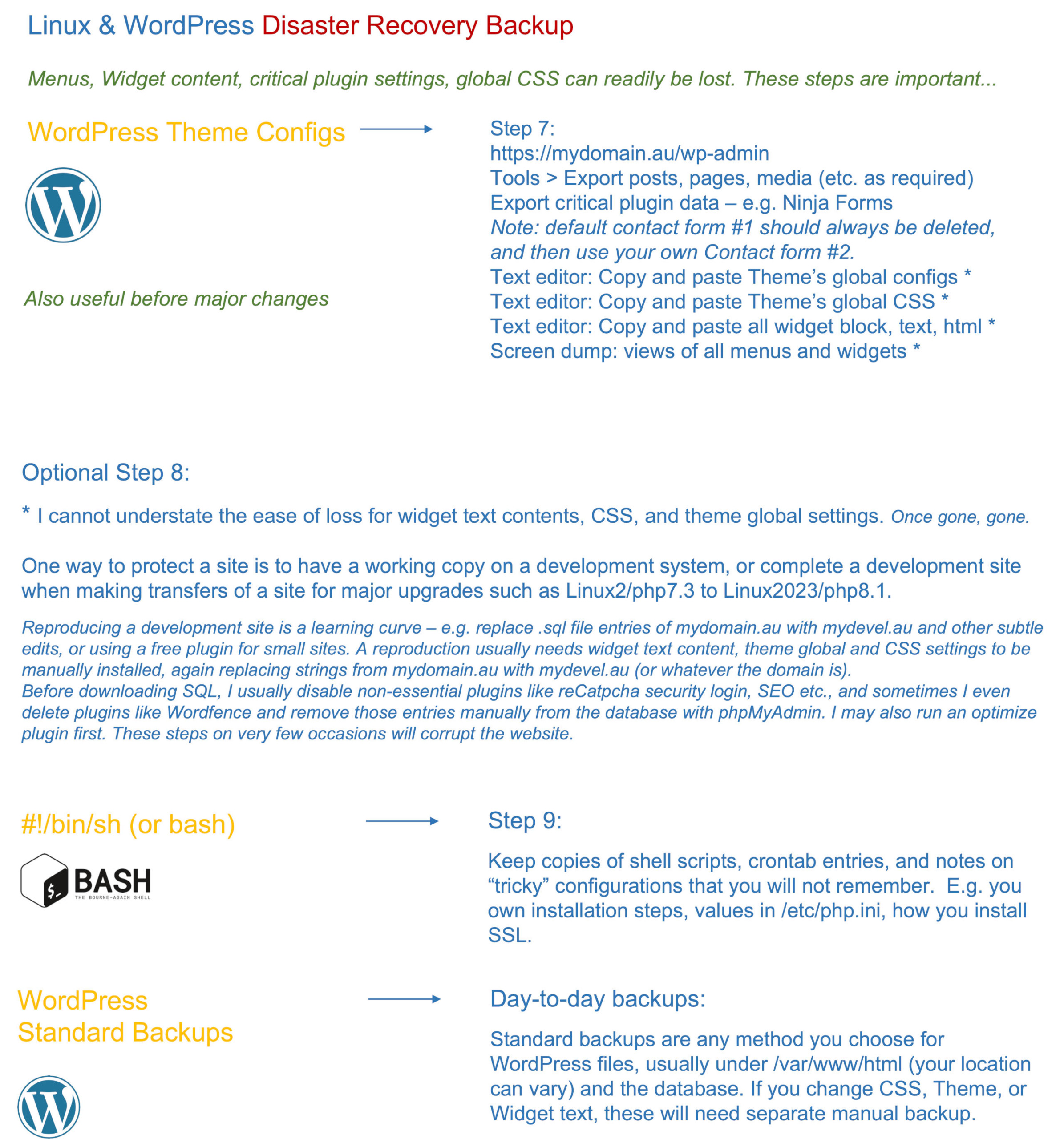

Make local PC copies of: the exported WP content (that is, pages, posts etc.); a tar backup of /var/www/html; a database backup, and if possible a database backup excluding non-essentials such as Wordfence or W3 Total Cache; exports from plugins like Ninja Forms; an export of the theme’s “master” configuration file; a text file copy of any global CSS you may have added; the installation notes you kept; installation files such as SSL certificates; and possibly tar backups of some configuration files for future reference (for example, /etc/letsencrypt, which can be fully backed up and restored, and your personalised settings for php.ini, ssl.conf and so on). You work out what these are, and of course include your crontab entries and your shell scripts.
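Much of the checklist above can be gathered into one dated archive with a short script. This is a bash sketch under stated assumptions: the function name is hypothetical, every path must be absolute, and the example paths in the usage line (typical of Amazon Linux with Apache) will differ on other builds. Missing paths are reported and skipped instead of stopping the run.

```bash
# Sketch: gather configuration files and crontab entries for the DR set.
# Assumptions: every PATH is absolute; collect_configs is a hypothetical name.

# Usage: collect_configs DEST_DIR /abs/path...
collect_configs() {
  local dest stamp archive p
  dest=$(cd "$1" && pwd) || return 1   # absolute, because tar runs with -C /
  shift
  local found=()
  stamp=$(date +%Y%m%d)
  archive="$dest/dr-configs-$stamp.tar"

  for p in "$@"; do
    case "$p" in
      /*) ;;
      *) echo "skipped (not absolute): $p" >&2; continue ;;
    esac
    if [ -e "$p" ]; then
      found+=("${p#/}")                 # stored relative to / for tar -C /
    else
      echo "skipped (not found): $p" >&2
    fi
  done

  # crontab -l fails when there is no crontab; that is not an error here.
  if command -v crontab > /dev/null; then
    crontab -l > "$dest/crontab-$stamp.txt" 2> /dev/null || true
  fi

  if [ "${#found[@]}" -eq 0 ]; then
    echo "nothing to archive" >&2
    return 1
  fi
  tar -C / -cf "$archive" "${found[@]}"
  echo "$archive"
}
```

An illustrative call is `sudo bash -c '. ./collect.sh; collect_configs /home/ec2-user /etc/letsencrypt /etc/php.ini /etc/httpd/conf.d/ssl.conf'`, where `collect.sh` is a hypothetical file holding the function. Root is typically needed because certbot keeps its certificate directories readable only by root.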

As one example, if you use openssl to create SSL certificates, you must keep installation notes, as you will forget how to do these things in the months ahead. Or you may have special language files inserted into certain WP directories. You cannot expect to remember these details, including external configurations for DNS, e-mail and so on.
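Part of those notes can be generated automatically. Assuming the `openssl` command-line tool is installed, this bash sketch appends a certificate's subject, issuer, validity dates and SHA-256 fingerprint to a notes file, which also records when renewal is due. The function name and notes file are hypothetical.

```bash
# Sketch: append certificate details to an installation-notes file.
# Assumption: the openssl CLI is installed; note_certificate is a hypothetical name.

# Usage: note_certificate CERT.pem NOTES.txt
note_certificate() {
  local cert="$1" notes="$2" details
  details=$(openssl x509 -in "$cert" -noout -subject -issuer \
              -startdate -enddate -fingerprint -sha256) || {
    echo "openssl could not read $cert" >&2
    return 1
  }
  # Timestamped entry, so repeated runs build up a history of certificates.
  printf '== %s certificate %s\n%s\n\n' "$(date '+%Y-%m-%d %H:%M')" "$cert" "$details" >> "$notes"
}
```

The commands you used to create the certificate still belong in the notes by hand; this only captures what the resulting certificate contains.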

When you extract a WordPress tar backup, you can always look at wp-config.php to see the database user name and password you used. However, it may be helpful to write down the WP users and their login details as well.
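Those database values can also be read straight out of an extracted wp-config.php. A minimal bash sketch, assuming the usual `define( 'DB_NAME', 'value' );` layout written by the WordPress installer; it does not handle values that themselves contain quote characters. The function name is hypothetical.

```bash
# Sketch: read a setting such as DB_NAME from an extracted wp-config.php.
# Assumptions: the file uses the usual define( 'KEY', 'value' ); form, and the
# value contains no quote characters. wp_config_value is a hypothetical name.

# Usage: wp_config_value /path/to/wp-config.php KEY
wp_config_value() {
  local file="$1" key="$2"
  # Match define( 'KEY', 'value' ) with single or double quotes; print the value.
  sed -n "s/^[[:space:]]*define([[:space:]]*['\"]${key}['\"][[:space:]]*,[[:space:]]*['\"]\([^'\"]*\)['\"].*/\1/p" "$file" | head -n 1
}
```

For example: `for k in DB_NAME DB_USER DB_HOST; do echo "$k=$(wp_config_value /var/www/html/wp-config.php "$k")"; done`.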

As a note, tar backups are best done like this:

cd /var/www/html

tar cvf /home/ec2-user/backup.tar ./.??* ./*

We use the ./ style. If we wanted to back up a directory, for example:

cd /etc

tar cvf /home/ec2-user/certbot.tar ./letsencrypt

Save all such files to your PC or S3 buckets.
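Copying the DR files to S3 can use the same `aws s3 cp` command mentioned earlier. This bash sketch assumes a configured AWS CLI and a hypothetical bucket `my-dr-bucket`, and files each run under a dated prefix so older DR sets are not overwritten. `PRINT_ONLY` is only this script's switch for printing the commands; the AWS CLI's own `--dryrun` option for `aws s3 cp` is another way to preview a copy.

```bash
# Sketch: copy DR backup files to S3 under a dated prefix, so earlier DR sets
# are kept. Assumptions: the AWS CLI is configured; the bucket name is
# hypothetical. PRINT_ONLY=1 is this script's own switch: it prints each
# command instead of running it.

# Usage: upload_dr_set BUCKET FILE...
upload_dr_set() {
  local bucket="$1"; shift
  local prefix f
  local cmd=()
  prefix="dr/$(date +%Y-%m-%d)"

  for f in "$@"; do
    cmd=(aws s3 cp "$f" "s3://$bucket/$prefix/$(basename "$f")")
    if [ "${PRINT_ONLY:-0}" = "1" ]; then
      printf '%s\n' "${cmd[*]}"
    elif ! "${cmd[@]}"; then
      echo "upload failed: $f" >&2
      return 1
    fi
  done
}
```

For example, `upload_dr_set my-dr-bucket /home/ec2-user/backup.tar /home/ec2-user/certbot.tar` places both files under `dr/<today's date>/` in the bucket.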

After these details are completed, of course, use Amazon for your snapshot.

As you can see, the DR backup is far more than a regular database and Unix .tar file backup. This methodology shows you have been diligent, and you should not be held liable for any future failure if for some reason things still cannot be restored.

Our simpler systems are not designed to use RAID disks or real-time cascading failovers, so at some point there is a limit to what can be expected.

I am aware of designers who have no awareness of data protection, so it is no surprise that they show no conscience over permanently lost customer data.

When you update a client site, it is up to you what backups you make prior to maintenance or an upgrade. Minor upgrades and fixes have lower risk. Running an optimisation program is higher risk. Upgrading to a new major version, rather than applying a minor release, is higher risk.

Standard backups of the files and database can be reassuring before making a number of reasonable changes.

Please keep in mind that WP plugin upgrades can degrade page displays or introduce other issues. This means we don’t kill the messenger by assuming something else is at fault when it isn’t. We look to solve problems by elimination and mitigation. It is advisable, though, to use t4g.micro instances in preference to the lowest-performing t4g.nano instances.

One subject of interest is the use of a CDN. Why would a simple website used predominantly in Australia need a CDN for the sake of GTmetrix scores? If you are global and have a higher level of demand, then it makes sense. I have found that a website should be stable and working well before flicking the switch to include a CDN. Nor, in my view, is there any value in having WordPress image files in S3 buckets. Keep things as standard as possible, with least maintenance as a policy in your builds. What is exciting now may be a burden or a failure down the track.